SONiC brings flexibility, lower costs, and faster innovation to networking, and it has become an important option for modern networks. Its open-source model and containerized architecture allow organizations to customize the NOS and reduce their dependence on proprietary solutions. It is also production-proven in the data centers of some of the world’s largest cloud providers. Read on to discover its origins and how organizations worldwide have used SONiC to build flexible, scalable, high-performing networks.

What is SONiC?

SONiC, or Software for Open Networking in the Cloud, is an open-source network operating system (NOS) based on Debian Linux that runs on switches built with ASICs from a wide range of leading vendors. It offers a full suite of network functionality, such as BGP and RDMA, that has been production-hardened in the data centers of some of the largest global cloud service providers. Originally developed by Microsoft and contributed to the Open Compute Project (OCP), SONiC moved to the Linux Foundation in 2022. The move was intended to tap the foundation’s large developer and user community and accelerate development of the NOS for new use cases.

The Evolution of SONiC

SONiC was created in response to the challenges Microsoft faced in managing the vast cloud-scale network that connects all of its cloud services. Microsoft needed more control, agility, and flexibility: the freedom to set its own cadence for software updates and releases, the ability to develop features with its own resources, more control over the software running on its switches, and better collaboration. SONiC was its answer. The project started as a solution for data center and enterprise networks, but it is now expanding to other use cases, including edge, carrier, AI, 5G, and telco.

Proprietary systems, by contrast, offer less flexibility and often leave users dependent on the vendor for updates and support. The disaggregated nature of open networking decouples hardware from software, fostering interoperability and accelerating innovation. In addition, combining white-box switches built on off-the-shelf merchant silicon with SONiC as a free NOS can significantly reduce costs. This approach can deliver features and support comparable to those of traditional vendors at a fraction of the cost, helping organizations reduce both CapEx and OpEx.

What Makes SONiC Unique?

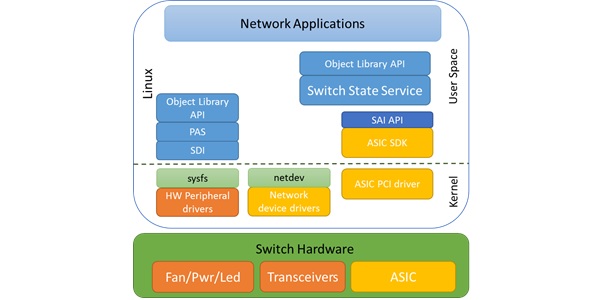

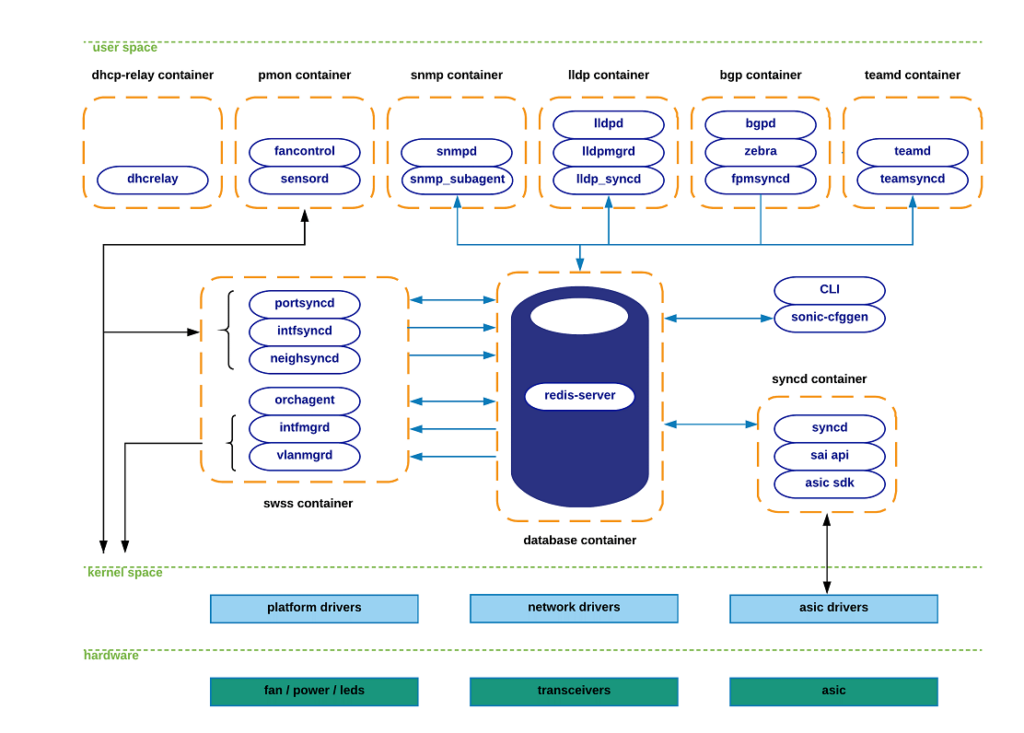

Arguably, SONiC’s biggest differentiator is its container-based microservices architecture, in which each software function runs as a separate, loosely coupled component. This modular approach makes it easier to scale, upgrade, and manage individual components without disrupting the whole network, and each component can be developed, updated, and maintained independently. SONiC consists of several modules that run either in Docker containers or directly on the Linux host. Docker containers are lightweight, self-contained packages that include everything needed to run an application: code, runtime, system tools, libraries, and settings. In SONiC’s high-level architecture, these modules run in user space, and each has a specific role, such as handling DHCP requests, running the Link Layer Discovery Protocol (LLDP), providing a command-line interface (CLI), configuring system options, and running a routing stack such as FRR or Quagga.

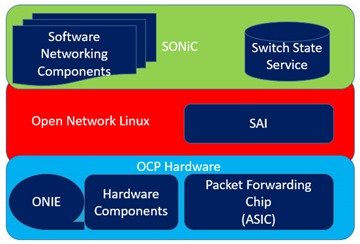

Another distinguishing feature of SONiC is that it runs on top of the Switch Abstraction Interface (SAI), a unified API layer that provides consistency across hardware vendors. SAI is supported by leading switch ASIC vendors, including Broadcom (Trident, Tomahawk, Helix, and Jericho series), NVIDIA (Spectrum series), Marvell (Prestera, Falcon, and Teralynx 5 series), Cisco (Silicon One), Intel (Tofino), and Centec (GoldenGate and TsingMa series), all of which provide SDKs to support SONiC. The OCP community uses a collaborative hardware and software co-design approach to keep these hardware abstraction layers evolving.

Today, SONiC runs on more than 100 switch platforms, with millions of ports deployed in production worldwide, making it a hardened NOS with a large and rapidly growing ecosystem. That ecosystem has attracted other vendors to build management tools on top of SONiC, allowing network operators to manage switches the same way they manage servers, using familiar mechanisms for software deployment, network monitoring, and upgrades. SONiC’s open-source nature supports adaptability, scalability, and flexibility, and it encourages collaboration among hardware manufacturers, service providers, and enterprises. This level of cooperation and control, which closed platforms did not offer, should help accelerate the development of the NOS.

The Architecture of SONiC

SONiC’s architecture is organized into a three-layer structure designed for robust performance and adaptability across diverse hardware. At its core, SONiC is a collection of kernel patches, device drivers, utilities, and other components integrated with a Linux distribution.

At the time of this writing, SONiC utilizes the following Docker containers to compartmentalize its core functionalities:

- Teamd

- Pmon

- Snmp

- Dhcp-relay

- Lldp

- Bgp

- Database

- Swss

- Syncd

Teamd Container:

- Runs the link aggregation (LAG) functionality on SONiC devices. “teamd” is a Linux-based, open-source implementation of the LAG protocol, and the “teamsyncd” process handles the interaction between “teamd” and the south-bound subsystems.

Pmon Container:

- Runs “sensord”, a daemon that periodically logs sensor readings from hardware components and raises an alert when an alarm is signaled. The Pmon container also hosts the “fancontrol” process, which collects fan-related state from the corresponding platform drivers.

Snmp Container:

- Hosts SNMP functionality.

- Processes:

  - snmpd: The SNMP server, which handles incoming SNMP polls from external network elements.

  - snmp-agent (sonic_ax_impl): SONiC’s implementation of an AgentX SNMP subagent. It feeds the master agent (snmpd) with information collected from the SONiC databases in the centralized Redis engine.

Dhcp-relay Container:

- The dhcp-relay agent relays DHCP requests from a subnet that has no DHCP server to one or more DHCP servers on other subnets.
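The relay step can be sketched in Python. This is a minimal illustration of the generic relay-agent rules from RFC 2131 and RFC 1542, not the code that runs in SONiC’s dhcp-relay container: the first relay fills in the `giaddr` field with the address of the interface the request arrived on (so the server knows which subnet to allocate from), increments the `hops` counter, and unicasts a copy to every configured server. The `DhcpPacket` class, the `relay_request` function, the addresses, and the hop limit of 16 are assumptions for illustration.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple

MAX_HOPS = 16  # assumed hop limit; real relay agents make this configurable


@dataclass(frozen=True)
class DhcpPacket:
    """Hypothetical, simplified view of the DHCP fields a relay agent touches."""
    client_mac: str
    giaddr: str = "0.0.0.0"  # gateway (relay) address; 0.0.0.0 means not relayed yet
    hops: int = 0


def relay_request(pkt: DhcpPacket, ingress_ip: str,
                  servers: List[str]) -> List[Tuple[str, DhcpPacket]]:
    """Return (server_ip, packet) pairs to unicast, or [] if the packet is dropped."""
    if pkt.hops > MAX_HOPS:
        # The request has crossed too many relays: silently discard it.
        return []
    # Only the first relay stamps giaddr; later relays leave it untouched.
    giaddr = pkt.giaddr if pkt.giaddr != "0.0.0.0" else ingress_ip
    relayed = replace(pkt, giaddr=giaddr, hops=pkt.hops + 1)
    # Forward one unicast copy to each configured DHCP server.
    return [(server, relayed) for server in servers]


if __name__ == "__main__":
    request = DhcpPacket(client_mac="00:11:22:33:44:55")
    for server, pkt in relay_request(request, "192.168.10.1", ["10.0.0.5", "10.0.0.6"]):
        print(server, pkt)
```

Keeping the packet immutable and returning the forwarding decisions, rather than sending them, keeps the sketch easy to test in isolation.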

Lldp Container:

- As its name implies, this container hosts LLDP functionality.

- Processes:

  - lldp: The LLDP daemon itself. It exchanges LLDP frames with external peers to advertise its own system capabilities and learn theirs.

  - lldp_syncd: Uploads the state discovered by LLDP to the system’s centralized message infrastructure (the Redis engine), so that applications interested in this information (for example, SNMP) can consume it.

  - lldpmgr: Provides incremental-configuration capabilities to the LLDP daemon by subscribing to STATE_DB within the Redis engine.

Bgp Container:

- Runs one of the supported routing stacks: Quagga or FRR. Although the container is named after the routing protocol it is best known for (BGP), these routing stacks can run various other protocols, such as OSPF, IS-IS, and LDP.

- BGP container functionality breaks down as follows:

  - bgpd: A standard BGP implementation. Routing state from external parties is received over ordinary TCP sockets and pushed down to the forwarding plane through the zebra/fpmsyncd interface.

  - zebra: Acts as a traditional IP routing manager; it provides kernel routing-table updates, interface lookups, and route redistribution across different protocols. Zebra also pushes the computed FIB down both to the kernel (through the netlink interface) and to the south-bound components involved in the forwarding process (through the Forwarding Plane Manager (FPM) interface).

  - fpmsyncd: A small daemon that collects the FIB state generated by zebra and writes it into the Application DB table (APPL_DB) residing in the Redis engine.
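The hand-off from zebra to APPL_DB can be illustrated with a small Python sketch. It models APPL_DB as a plain dictionary instead of Redis and writes each route under a `ROUTE_TABLE:<prefix>` key with comma-separated `nexthop` and `ifname` fields, which is our understanding of the APPL_DB route schema; verify the exact field names against the SONiC documentation for your release. The `push_route` function is hypothetical and is not fpmsyncd’s actual code, which is written in C++.

```python
from typing import Dict, List, Tuple

# Stand-in for APPL_DB: raw key -> field/value hash.
AppDb = Dict[str, Dict[str, str]]


def push_route(appl_db: AppDb, prefix: str,
               next_hops: List[Tuple[str, str]]) -> None:
    """Write a route learned from zebra into a dict-based APPL_DB.

    next_hops holds (gateway_ip, interface_name) pairs; an ECMP route simply
    has several pairs. In this sketch an empty list means the route was withdrawn.
    """
    key = f"ROUTE_TABLE:{prefix}"
    if not next_hops:
        appl_db.pop(key, None)  # withdrawn route: remove the entry
        return
    appl_db[key] = {
        # Parallel comma-separated lists, one position per ECMP member.
        "nexthop": ",".join(gw for gw, _ in next_hops),
        "ifname": ",".join(ifname for _, ifname in next_hops),
    }


if __name__ == "__main__":
    db: AppDb = {}
    push_route(db, "10.1.0.0/24", [("10.0.0.1", "Ethernet0"), ("10.0.0.3", "Ethernet4")])
    print(db)
```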

Database Container:

- Hosts the Redis database engine. SONiC applications access the databases held in this engine through a UNIX socket that the Redis daemon exposes for this purpose. The Redis engine hosts these main databases:

  - APPL_DB: Stores the state generated by all application containers, such as routes, next hops, and neighbors. This is the south-bound entry point for all applications that need to interact with other SONiC subsystems.

  - CONFIG_DB: Stores the configuration state created by SONiC applications, such as port configurations, interfaces, and VLANs.

  - STATE_DB: Stores key operational state for entities configured in the system. This state is used to resolve dependencies between different SONiC subsystems.

  - ASIC_DB: Stores the state needed to drive the ASIC’s configuration and operation. This state is kept in an ASIC-friendly format to ease the interaction between syncd (described below) and the ASIC SDKs.

  - COUNTERS_DB: Stores counters and statistics associated with each port in the system. This state can be used to answer a local CLI request or to feed a telemetry channel for remote consumption.
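For orientation when inspecting a live switch, the sketch below maps the databases above to Redis database numbers and key separators. The numbers and separators reflect the default `database_config.json` shipped with SONiC images as we understand it (APPL_DB uses `:` between table and key, CONFIG_DB and STATE_DB use `|`); treat them as assumptions and check your own image. The helper functions are hypothetical; they only build key strings and a `redis-cli` command line and do not connect to Redis.

```python
from typing import Dict, Tuple

# Assumed defaults: database name -> (Redis DB number, table/key separator).
SONIC_DBS: Dict[str, Tuple[int, str]] = {
    "APPL_DB": (0, ":"),
    "ASIC_DB": (1, ":"),
    "COUNTERS_DB": (2, ":"),
    "CONFIG_DB": (4, "|"),
    "STATE_DB": (6, "|"),
}


def redis_key(db_name: str, table: str, *key_parts: str) -> str:
    """Join a table name and key parts with the database's separator."""
    _, sep = SONIC_DBS[db_name]
    return sep.join((table, *key_parts))


def redis_cli_hgetall(db_name: str, table: str, *key_parts: str) -> str:
    """Build a redis-cli command that dumps one entry's field/value hash."""
    db_index, _ = SONIC_DBS[db_name]
    return f"redis-cli -n {db_index} HGETALL '{redis_key(db_name, table, *key_parts)}'"


if __name__ == "__main__":
    print(redis_cli_hgetall("CONFIG_DB", "PORT", "Ethernet0"))
    print(redis_cli_hgetall("APPL_DB", "ROUTE_TABLE", "10.1.0.0/24"))
```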

Swss Container:

- The Switch State Service (SwSS) container comprises a collection of tools that enable effective communication among all SONiC modules. While the database container excels at providing storage, SwSS focuses mainly on mechanisms that foster communication and arbitration among the different parties.

- SwSS also hosts the processes responsible for north-bound interaction with the SONiC application layer. As noted earlier, the exceptions are fpmsyncd, teamsyncd, and lldp_syncd, which run within the bgp, teamd, and lldp containers, respectively. Regardless of whether they run inside or outside the swss container, these processes share the same goal: connecting SONiC applications to SONiC’s centralized message infrastructure (the Redis engine). These daemons can usually be identified by their naming convention: *syncd.
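The communication pattern SwSS provides can be pictured as tables that producers write to and consumers subscribe to. The following Python sketch is a minimal, in-memory model of that idea: a producer (for example, a syncd-style daemon) sets or deletes entries, and every subscriber (for example, a process that programs the ASIC) drains the changes in order. `ToyStateTable` and `drain` are hypothetical names; SONiC’s real implementation lives in the swss-common library, is backed by Redis, and has a different API.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Optional, Tuple

# An update is (key, operation, fields); fields is None for deletions.
Update = Tuple[str, str, Optional[Dict[str, str]]]


class ToyStateTable:
    """Hypothetical in-memory table with change notifications (not swss-common)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.entries: Dict[str, Dict[str, str]] = {}
        self.subscribers: List[Deque[Update]] = []

    def subscribe(self) -> Deque[Update]:
        """Give a consumer its own queue of pending updates."""
        queue: Deque[Update] = deque()
        self.subscribers.append(queue)
        return queue

    def set(self, key: str, fields: Dict[str, str]) -> None:
        self.entries[key] = dict(fields)
        self._notify((key, "SET", dict(fields)))

    def delete(self, key: str) -> None:
        self.entries.pop(key, None)
        self._notify((key, "DEL", None))

    def _notify(self, update: Update) -> None:
        # Every subscriber sees every change, in the order it was produced.
        for queue in self.subscribers:
            queue.append(update)


def drain(queue: Deque[Update], handler: Callable[[Update], None]) -> int:
    """Consumer loop body: apply all pending updates and return how many ran."""
    count = 0
    while queue:
        handler(queue.popleft())
        count += 1
    return count
```

Giving each subscriber its own queue means a slow consumer never blocks the producer or other consumers, which mirrors the decoupling the SwSS design aims for.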

Portsyncd:

- Listens to port-related netlink events. During boot-up, portsyncd obtains physical-port information by parsing the system’s hardware-profile configuration files. The collected state is pushed into APPL_DB.

Intfsyncd:

- Listens to interface-related netlink events and pushes the collected state into APPL_DB. Attributes such as new or changed IP addresses associated with an interface are handled by this process.

Neighsyncd:

- Listens to neighbor-related netlink events triggered by newly discovered neighbors as a result of ARP processing. This daemon handles attributes such as the neighbor’s MAC address and address family. This state is eventually used to build the adjacency table required in the data plane for L2-rewrite purposes.

Teamsyncd:

- Discussed previously; runs within the teamd container. As in the previous cases, the collected state is pushed into APPL_DB.

Fpmsyncd:

- Discussed previously; runs within the bgp container. Again, the collected state is injected into APPL_DB.

Lldp_syncd:

- Also discussed previously; runs within the lldp container.
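To illustrate what neighsyncd does with an event, here is a Python sketch that turns a simplified neighbor notification into an APPL_DB entry. It assumes a `NEIGH_TABLE:<interface>:<ip>` key with `neigh` (MAC address) and `family` fields, which matches our understanding of the APPL_DB schema, and it uses a dictionary in place of Redis. The real neighsyncd parses netlink messages in C++; the `on_neighbor_event` function and its arguments are hypothetical.

```python
import ipaddress
from typing import Dict, Optional

# Stand-in for APPL_DB: raw key -> field/value hash.
AppDb = Dict[str, Dict[str, str]]


def on_neighbor_event(appl_db: AppDb, ifname: str, ip: str,
                      mac: Optional[str]) -> str:
    """Translate one simplified neighbor event into a NEIGH_TABLE entry.

    mac=None models a neighbor that was removed or became unresolved.
    Returns the key that was written or deleted.
    """
    addr = ipaddress.ip_address(ip)  # raises ValueError on a malformed address
    key = f"NEIGH_TABLE:{ifname}:{str(addr)}"
    if mac is None:
        appl_db.pop(key, None)
    else:
        appl_db[key] = {
            "neigh": mac.lower(),  # normalize the MAC address to lowercase
            "family": "IPv4" if addr.version == 4 else "IPv6",
        }
    return key
```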

Syncd Container:

- In a nutshell, the syncd container provides a mechanism for synchronizing the switch’s network state with the switch’s actual hardware (the ASIC). This includes initializing and configuring the ASIC and collecting its current status.

- These are the main logical components in the syncd container:

  - Syncd: The process that executes the synchronization logic described above. At compile time, syncd links with the ASIC SDK library provided by the hardware vendor and injects state into the ASICs by invoking the interfaces provided for that purpose. Syncd subscribes to ASIC_DB to receive state from SwSS actors and, at the same time, registers as a publisher to push state coming from the hardware.

  - SAI API: The Switch Abstraction Interface (SAI) defines an API that provides a vendor-independent way to control forwarding elements, such as a switching ASIC, an NPU, or a software switch, in a uniform manner.

  - ASIC SDK: Hardware vendors are expected to provide a SAI-friendly implementation of the SDK required to drive their ASICs. This implementation is typically provided as a dynamically linked library that hooks into a driving process (syncd, in this case) responsible for driving its execution.
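The value of the SAI layer is that syncd codes against one interface while each vendor supplies its own implementation. The Python sketch below mimics that structure with an abstract base class and two hypothetical vendor backends. The real SAI is a set of C headers, and the class and method names here (`SwitchAbstraction`, `create_route`, `toy_syncd`, and so on) are illustrative assumptions, not SAI function names.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Tuple


class SwitchAbstraction(ABC):
    """Toy, SAI-inspired contract that every vendor backend must implement."""

    @abstractmethod
    def create_route(self, prefix: str, next_hop: str) -> None: ...

    @abstractmethod
    def remove_route(self, prefix: str) -> None: ...


class VendorASim(SwitchAbstraction):
    """Hypothetical vendor SDK that keeps routes in a dict."""

    def __init__(self) -> None:
        self.table: Dict[str, str] = {}

    def create_route(self, prefix: str, next_hop: str) -> None:
        self.table[prefix] = next_hop

    def remove_route(self, prefix: str) -> None:
        self.table.pop(prefix, None)


class VendorBSim(SwitchAbstraction):
    """Hypothetical vendor SDK with a different internal representation."""

    def __init__(self) -> None:
        self.entries: List[Tuple[str, str]] = []

    def create_route(self, prefix: str, next_hop: str) -> None:
        self.remove_route(prefix)  # keep at most one entry per prefix
        self.entries.append((prefix, next_hop))

    def remove_route(self, prefix: str) -> None:
        self.entries = [e for e in self.entries if e[0] != prefix]


def toy_syncd(asic: SwitchAbstraction, ops: List[Tuple[str, str, str]]) -> None:
    """Apply (op, prefix, next_hop) records, as if read from ASIC_DB.

    The loop only uses the abstract interface, so it never needs to know
    which vendor backend it is driving.
    """
    for op, prefix, next_hop in ops:
        if op == "create":
            asic.create_route(prefix, next_hop)
        elif op == "remove":
            asic.remove_route(prefix)
        else:
            raise ValueError(f"unknown op {op!r}")
```

Because `toy_syncd` depends only on the abstract interface, swapping `VendorASim` for `VendorBSim` requires no change to the synchronization loop, which is the property that lets one SONiC codebase drive ASICs from different vendors.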

The Ecosystem

SONiC’s ecosystem is rapidly growing and evolving, with new vendors and deployments being added continually. This expansion is driven by the Open Compute Project and the SONiC community, which provide assistance and resources to those interested in deploying SONiC. As usage increases, further contributions support a broader range of switching ASICs, platforms, and advanced features like telemetry and automated management. Consequently, deploying SONiC in your network becomes increasingly accessible and attractive.

SONiC benefits from a robust and active community supported by a diverse network of contributors, including tech giants and industry stakeholders. This dynamic ecosystem fosters collaborative efforts and knowledge exchange, driving innovation and continuous enhancement of the SONiC platform.

Primary Objectives of SONiC

The primary objectives of the SONiC OS include enabling network operators to utilize world-class switching hardware across various network tiers. Utilizing a container-based architecture, SONiC facilitates the rapid deployment of new features and secure, reliable updates across the network within hours instead of weeks. This approach not only minimizes the impact on end users but also confines any failures to the specific software component involved, preventing widespread network disruption. This strategic containment enhances overall network stability and operational efficiency.

Final Thoughts

SONiC is a cloud-scale NOS that aims to be the Linux of network operating systems, emphasizing simplicity and scalability and giving network administrators more control and agility. Its cloud-native design is a significant advantage: it is built to integrate smoothly with cloud-based applications, helping organizations get the most out of these technologies.

Is SONiC Right for Your Organization?

SONiC could significantly enhance your network infrastructure; however, it also presents a set of challenges that require careful planning, skilled personnel, a deep understanding of its architecture, continuous support, and alignment with your organization’s strategic goals. To unlock its full potential, consider partnering with an experienced open networking specialist who can help you navigate these challenges.

Since 2017, we have been assisting organizations of all sizes with deploying disaggregated network infrastructure powered by Linux-based open networking software, including IP Infusion, Cumulus Linux, Pica8, and SONiC, alongside supported white box hardware platforms like Edge-Core and UfiSpace. Our seasoned experts are ready to provide ecosystem guidance, answer interoperability questions, offer lab access, help with hardware procurement, and address general SONiC inquiries. We also offer guidance on best practices, network design, proof of concept, and pilot projects. Contact us to discover how SONiC can benefit your organization.

Humza Atlaf, Network Engineer

Humza is a network engineer at Hardware Nation Labs, where his enthusiasm for open networking drives his work. Combining deep expertise with innovative approaches, he designs robust, scalable networks. His practical experience includes configuring and deploying protocols such as LACP, VLANs, and VRRP on Dell L3 switches and Cisco Nexus 9K switches. In his previous role, he was part of a SONiC testing team, further honing his skills in network setup and troubleshooting. Humza is also adept at network analysis with tools such as Wireshark, which strengthens his ability to manage complex network environments.

Alex Cronin, Solutions Architect

Alex is a solutions architect with over 15 years of broad infrastructure experience, specializing in white box networking and open-source infrastructure solutions. He aims to deliver solutions that are impactful, scalable, and cost-effective. Alex is a trusted advisor to organizations and IT leaders, helping them make informed decisions about their IT infrastructure and aligning technology with strategic ambitions.

References:

- SONiC Foundation: https://sonicfoundation.dev/resources-for-developers/ (official website)

- SONiC Documentation: https://github.com/sonic-net/SONiC (hosted on GitHub)

- FRRouting Project: https://frrouting.org (routing software suite used by SONiC)

- Microsoft Announces SONiC, a Debian-Based Linux Switch OS: https://www.techtalkthai.com/microsoft-announced-sonic-debian-linux-switch-os/ (TechTalkThai article)

- OCP Summit: Microsoft Offers SONiC Network Software: https://www.datacenterdynamics.com/en/news/ocp-summit-microsoft-offers-sonic-network-software/ (Data Center Dynamics article)