The network continues to be the bottleneck for large-scale AI workloads, and trillion-parameter models are pushing traditional infrastructure past its breaking point.

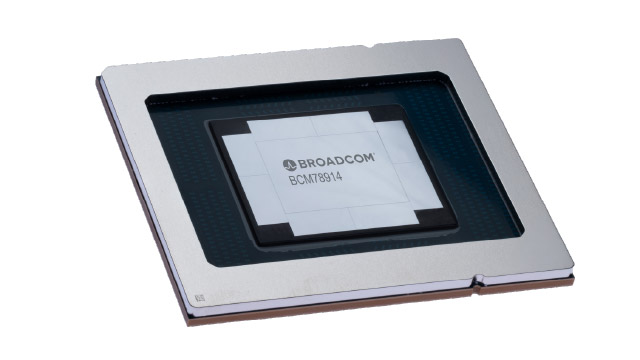

Over the past several months, Broadcom (NASDAQ:AVGO) has been rolling out its Tomahawk 6 switch silicon to hyperscalers for both scale-up and scale-out networks to address these AI workload challenges. The Tomahawk 6 ASIC, along with the TH6-Davisson co-packaged optics (CPO) solution, was the talk of OCP 2025 this past week.

At a Glance

Tomahawk 6 switch silicon delivers a staggering 102.4 Tbps of throughput, configurable as up to 512 ports of 200GbE or 128 ports of 800GbE, and the architecture is designed to scale to 1.6T interconnects as the industry moves beyond 800G. That’s double the throughput of its Tomahawk 5 predecessor, which debuted in 2022. Built on a 3nm process, the chip’s 512-port density makes it the industry’s highest-radix switch, and it pairs that radix with industry-leading SerDes and improved power efficiency for AI/ML workloads.
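The port configurations quoted above are simply total capacity divided by per-port speed. A minimal Python check of that arithmetic, assuming the 51.2 Tbps Tomahawk 5 capacity implied by "double the throughput"; the 1.6T row is computed here rather than quoted from Broadcom:

```python
# Port counts implied by a fixed switching capacity.
# Capacities are in Gbps: 102.4 Tbps for Tomahawk 6, 51.2 Tbps for Tomahawk 5.
TH6_CAPACITY_GBPS = 102_400
TH5_CAPACITY_GBPS = 51_200


def port_count(capacity_gbps: int, port_speed_gbps: int) -> int:
    """Number of full-rate ports a switch of the given capacity can drive."""
    if port_speed_gbps <= 0:
        raise ValueError("port speed must be positive")
    return capacity_gbps // port_speed_gbps


if __name__ == "__main__":
    for speed in (200, 800, 1600):
        print(f"{speed:>4}G ports: TH6={port_count(TH6_CAPACITY_GBPS, speed)}, "
              f"TH5={port_count(TH5_CAPACITY_GBPS, speed)}")
```

Running it prints 512, 128, and 64 ports for TH6 and exactly half of each for TH5.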

Broadcom’s Tomahawk silicon has been the familiar merchant silicon inside the networking switch gear of many industry-leading vendors for more than a decade. Over the past several years, Broadcom has focused on accelerating AI workloads, which is also the stated goal of TH6. What’s different now is the sheer scale of those workloads, which the new silicon is built to address.

Credit: Broadcom

Scale-Up and Scale-Out Architecture

One of the biggest technical achievements of TH6 is its ability to serve both scale-up and scale-out networking requirements over Ethernet.

The new silicon supports scale-up clusters of 512 XPUs and two-tier scale-out networks of 100,000+ XPUs. The 512-port density per chip is what makes this possible: it lets you build flat, low-latency clusters without the deep multi-tier spine-leaf architectures that add hops and microseconds. For scale-up AI training clusters, a single switch tier can connect all 512 accelerators.

For massive scale-out deployments, TH6 lets you build 100,000+ XPU networks with just two tiers at 200 Gbps per link. Traditional data center switches would require three or four tiers to reach this scale, and each additional tier adds latency and potential congestion points.
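The tier counts above follow from switch radix. A minimal sketch, assuming a non-blocking folded Clos (leaf-spine) built from identical switches, one 200G port per XPU, and no oversubscription; fabrics with oversubscription or several NICs per XPU will land on different numbers:

```python
def max_endpoints(radix: int, tiers: int) -> int:
    """Endpoints in a non-blocking folded Clos of identical radix-k switches.

    Lower tiers split ports half down, half up; the top tier points all ports
    down. That gives k for one tier, k^2/2 for two, k^3/4 for three, i.e.
    2 * (k/2) ** tiers in general.
    """
    if radix <= 0 or radix % 2 or tiers < 1:
        raise ValueError("radix must be a positive even number and tiers >= 1")
    return 2 * (radix // 2) ** tiers


def tiers_needed(radix: int, endpoints: int) -> int:
    """Smallest number of tiers that reaches the requested endpoint count."""
    tiers = 1
    while max_endpoints(radix, tiers) < endpoints:
        tiers += 1
    return tiers


if __name__ == "__main__":
    # Radix 512: 102.4 Tbps at 200G per port. Radix 256: 51.2 Tbps at 200G per port.
    for radix in (512, 256):
        print(radix, max_endpoints(radix, 2), tiers_needed(radix, 100_000))
```

For radix 512 this gives 512 endpoints in one tier (the scale-up figure) and 131,072 in two tiers, while a radix-256 switch tops out at 32,768 in two tiers and needs a third tier to pass 100,000.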

The chip also supports a wide range of topologies, including Clos, rail-optimized, rail-only, and torus configurations. This flexibility means you can optimize for your specific workload characteristics rather than being locked into a single architecture.
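Of the topologies listed, rail-optimized is the least self-explanatory. A minimal sketch of the commonly described wiring rule, assuming servers with one NIC per accelerator and a spine layer above the rails, and ignoring any forwarding inside the server; it illustrates the general pattern, not a Broadcom reference design, and the function names are hypothetical:

```python
from collections import defaultdict


def rail_optimized_wiring(num_servers: int, nics_per_server: int) -> dict:
    """Map each rail (leaf switch) to the (server, nic) ports cabled to it.

    NIC i of every server connects to rail i, so accelerators that share a
    local index across servers sit behind the same leaf switch.
    """
    rails = defaultdict(list)
    for server in range(num_servers):
        for nic in range(nics_per_server):
            rails[nic].append((server, nic))
    return dict(rails)


def hops_between(a: tuple, b: tuple) -> int:
    """Switch hops between two (server, nic) ports, assuming a spine above the rails.

    Same rail: one leaf hop. Different rails: leaf, spine, leaf.
    """
    return 1 if a[1] == b[1] else 3


if __name__ == "__main__":
    wiring = rail_optimized_wiring(num_servers=4, nics_per_server=8)
    print(wiring[3])  # every server's NIC 3 lands on rail 3
```

The design choice is that collectives usually pair accelerators with the same local index across servers, so keeping those on one rail keeps most traffic to a single switch hop.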

Ultra-low Latency Packet Buffering

AI training workloads generate massive collective communication patterns, such as all-reduce and all-gather operations. When thousands of GPUs or XPUs (TPUs, NPUs, or other AI accelerators) send data simultaneously, packet buffering becomes the bottleneck.
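To see why collectives stress the fabric, consider ring all-reduce, one widely used algorithm for these operations. Each of N participants sends 2(N-1)/N times the buffer size S, so per-node traffic approaches 2S regardless of cluster size, and every node transmits at once. The pure-Python simulation below models the data movement only (it is not a network implementation): it runs the reduce-scatter and all-gather phases and counts the elements each node sends.

```python
def ring_allreduce(data: list[list[float]]) -> tuple[list[list[float]], int]:
    """Sum all-reduce over a logical ring of len(data) nodes.

    Returns every node's final vector and the number of elements each node sent.
    Assumes all vectors have the same length, divisible by the node count.
    """
    n = len(data)
    size = len(data[0])
    if size % n:
        raise ValueError("vector length must split evenly into n chunks")
    chunk = size // n
    buf = [list(v) for v in data]  # each node's working copy
    sent_per_node = 0

    def chunk_range(c: int) -> range:
        return range(c * chunk, (c + 1) * chunk)

    # Phase 1, reduce-scatter: at step s node i passes chunk (i - s) % n to its
    # right neighbour, which adds it in. After n - 1 steps node i holds the
    # complete sum for chunk (i + 1) % n.
    for step in range(n - 1):
        outgoing = [(i, (i - step) % n) for i in range(n)]
        # Snapshot all payloads first so every node sends pre-step values.
        payloads = [[buf[i][k] for k in chunk_range(c)] for i, c in outgoing]
        for (i, c), payload in zip(outgoing, payloads):
            dst = (i + 1) % n
            for k, value in zip(chunk_range(c), payload):
                buf[dst][k] += value
        sent_per_node += chunk

    # Phase 2, all-gather: at step s node i forwards chunk (i + 1 - s) % n,
    # and the neighbour overwrites its copy with the finished values.
    for step in range(n - 1):
        outgoing = [(i, (i + 1 - step) % n) for i in range(n)]
        payloads = [[buf[i][k] for k in chunk_range(c)] for i, c in outgoing]
        for (i, c), payload in zip(outgoing, payloads):
            dst = (i + 1) % n
            for k, value in zip(chunk_range(c), payload):
                buf[dst][k] = value
        sent_per_node += chunk

    return buf, sent_per_node


if __name__ == "__main__":
    result, sent = ring_allreduce([[float(i)] * 8 for i in range(4)])
    print(result[0], sent)
```

With 4 nodes and 8-element vectors, each node ends with all 6.0s and sends 12 elements, matching 2(N-1)/N * S = 2 * 3/4 * 8.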

TH6 backs its 102.4 Tbps of switching capacity with the industry’s most advanced packet buffer architecture. This isn’t just about total buffer size; it’s about buffer throughput and how quickly the switch can absorb microburst traffic without dropping packets or creating tail latency.
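How much buffer a microburst needs is simple arithmetic. During an incast, N senders at line rate converge on one egress port that drains at line rate, so (N - 1) ports’ worth of traffic must be queued for the duration of the burst. A minimal sketch; the fan-in, port speed, and burst length are illustrative assumptions, not Tomahawk 6 buffer specifications:

```python
def incast_buffer_bytes(fan_in: int, port_gbps: float, burst_us: float) -> float:
    """Bytes queued at one egress port while `fan_in` line-rate senders burst into it.

    The egress port drains at line rate, so (fan_in - 1) ports' worth of
    traffic accumulates for the duration of the burst.
    """
    if fan_in < 1:
        raise ValueError("fan_in must be at least 1")
    excess_bits = (fan_in - 1) * port_gbps * 1_000 * burst_us  # 1 Gbps for 1 us = 1,000 bits
    return excess_bits / 8


if __name__ == "__main__":
    # Illustrative incast: 8 senders at 200G converging on one 200G port for 10 us.
    print(f"{incast_buffer_bytes(8, 200, 10) / 1e6:.2f} MB")
```

Eight 200G senders bursting for 10 microseconds into a single 200G port need about 1.75 MB of buffer for that one port, which is why the rate at which the buffer can absorb traffic matters, not just its size.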

The architecture is specifically optimized for RoCEv2 and other RDMA protocols that AI frameworks depend on. RDMA is extremely sensitive to packet loss and reordering. Even a single dropped packet can stall an entire training job for milliseconds while the transport layer recovers.
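RoCEv2 transports have traditionally recovered from loss with go-back-N retransmission, which is a large part of why a single drop is so costly. A minimal sketch of that arithmetic; the 10-microsecond RTT and 4096-byte MTU are assumptions for illustration, and selective repeat appears only as a point of comparison:

```python
import math


def window_packets(link_gbps: float, rtt_us: float, mtu_bytes: int) -> int:
    """Packets in flight needed to keep a link busy (bandwidth-delay product / MTU)."""
    bdp_bytes = link_gbps * 1_000 * rtt_us / 8  # 1 Gbps for 1 us = 1,000 bits
    return math.ceil(bdp_bytes / mtu_bytes)


def go_back_n_resends(window: int, lost_index: int) -> int:
    """Go-back-N: the receiver discards everything after the gap, so the sender
    replays the lost packet plus every packet sent after it in the window."""
    if not 0 <= lost_index < window:
        raise ValueError("lost_index must fall inside the window")
    return window - lost_index


def selective_repeat_resends(window: int, lost_index: int) -> int:
    """Selective repeat, for comparison: only the missing packet is resent."""
    if not 0 <= lost_index < window:
        raise ValueError("lost_index must fall inside the window")
    return 1


if __name__ == "__main__":
    w = window_packets(200, 10, 4096)  # assumed 200G link, 10 us RTT, 4 KB MTU
    print(w, go_back_n_resends(w, 0), selective_repeat_resends(w, 0))
```

Under those assumptions about 62 packets are in flight per connection, so losing the first one forces all 62 to be resent under go-back-N, on top of the delay before the sender even learns of the loss.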

By maintaining line-rate performance even under heavy congestion, TH6 keeps expensive GPU clusters productive instead of sitting idle while the network recovers.

Advanced Congestion Management

AI workloads create low-entropy flows. Unlike web traffic or database queries, which spread across thousands of independent connections, AI training generates a relatively small number of synchronized elephant flows, with many nodes sending similar data patterns at exactly the same time.

Traditional load balancing fails here. Standard ECMP hashing assigns each flow to a path based on its header fields, and with so few distinct flows to hash, it can’t spread these synchronized elephant flows evenly. Some paths end up overloaded while others sit idle.
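Static ECMP picks a path by hashing each flow’s header fields, so with only a handful of long-lived flows, the outcome depends entirely on where those few hashes land. A minimal sketch using `zlib.crc32` as a stand-in for a switch’s hash function (real ASICs use their own configurable hashes) and a hypothetical set of 16 RoCEv2 flows (UDP destination port 4791) over 16 equal-cost uplinks:

```python
import zlib
from collections import Counter


def ecmp_path(five_tuple: tuple, num_paths: int) -> int:
    """Static ECMP: hash the flow's 5-tuple and pick a path by modulo."""
    key = "|".join(map(str, five_tuple)).encode()
    return zlib.crc32(key) % num_paths


def path_loads(flows: list, num_paths: int) -> Counter:
    """How many flows each path carries under static hashing."""
    return Counter(ecmp_path(f, num_paths) for f in flows)


# Hypothetical workload: 16 equal elephant flows (src IP, dst IP, proto, src port, dst port).
flows = [(f"10.0.0.{i}", f"10.0.1.{i}", 17, 49152 + i, 4791) for i in range(16)]
loads = path_loads(flows, 16)
print("busiest path carries", max(loads.values()), "flows;", 16 - len(loads), "paths idle")
```

A perfect spread would put one flow on each uplink, but with 16 flows hashed onto 16 paths a collision is nearly certain (the chance of none is 16!/16^16, roughly one in a million), so the busiest uplink usually carries two or more flows while others carry none.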

TH6 includes hardware-based adaptive routing and dynamic load balancing designed specifically for these AI traffic patterns. The chip monitors congestion in real time and automatically steers flows away from hot spots, maximizing network utilization.
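Compared with static ECMP hashing, congestion-aware placement looks at current load before choosing a path. A minimal greedy sketch of that principle: each new flow goes to whichever path has the least queued traffic right now. Broadcom’s actual mechanism (its telemetry, decision granularity, and timing) is not described in the source, and these function names are hypothetical:

```python
def least_loaded(queue_bytes: list[int], candidates: list[int]) -> int:
    """Choose the candidate port with the shallowest queue; ties go to the lowest port."""
    return min(candidates, key=lambda port: (queue_bytes[port], port))


def adaptive_placement(flow_bytes: list[int], num_paths: int) -> list[int]:
    """Place flows one at a time on whichever path is least loaded right now.

    Returns the resulting per-path load. Real hardware reacts to measured queue
    occupancy and link utilization; here accumulated bytes stand in for both.
    """
    loads = [0] * num_paths
    for size in flow_bytes:
        port = least_loaded(loads, list(range(num_paths)))
        loads[port] += size
    return loads


if __name__ == "__main__":
    print(adaptive_placement([1_000_000] * 16, 16))  # one elephant flow per path
```

Given 16 equal flows and 16 paths, this always lands exactly one flow per path, the even spread that static hashing only achieves by luck.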

It also supports end-to-end congestion control and hardware-based link failover. When a link fails, the switch reroutes traffic in hardware without waiting for control plane convergence. This keeps training jobs running and reduces job completion time, which directly translates to a lower cost per training run.
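A minimal sketch of local failover, assuming a hypothetical next-hop group held in the data plane: when a member link goes down, it is removed from the active set immediately, and traffic re-hashes across the survivors without any routing-protocol exchange. The class and method names are illustrative, not a Broadcom SDK API:

```python
class EcmpGroup:
    """Hypothetical next-hop group with local, data-plane-style failover."""

    def __init__(self, members: list[str]) -> None:
        self.members = list(members)
        self.down: set[str] = set()

    def link_down(self, member: str) -> None:
        # Takes effect on the very next lookup; no control-plane update needed.
        self.down.add(member)

    def link_up(self, member: str) -> None:
        self.down.discard(member)

    def next_hop(self, flow_hash: int) -> str:
        """Pick a live member for a flow by hashing over the surviving links."""
        active = [m for m in self.members if m not in self.down]
        if not active:
            raise RuntimeError("no live next hops")
        return active[flow_hash % len(active)]


if __name__ == "__main__":
    group = EcmpGroup(["p0", "p1", "p2", "p3"])
    print(group.next_hop(1))  # p1
    group.link_down("p1")
    print(group.next_hop(1))  # re-hashed onto a surviving link
```

The plain modulo here also moves some flows that were on healthy links; production switches typically use resilient hashing to avoid that, which this sketch leaves out for brevity.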

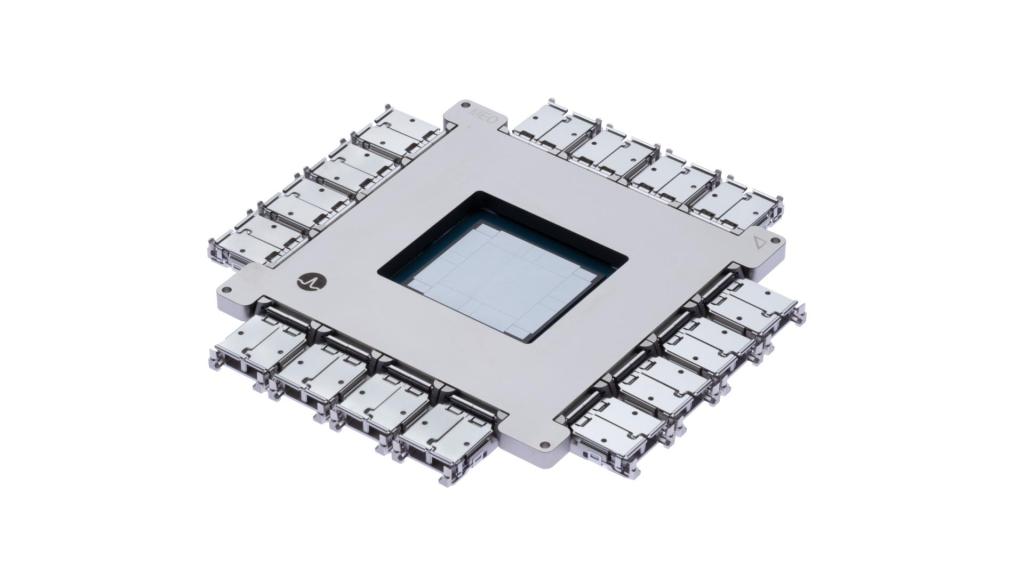

Tomahawk 6 Davisson Co-Packaged Optics Announcement

On October 8, 2025, the week before OCP 2025, Broadcom announced that it is now shipping TH6-Davisson, its third-generation CPO solution.

Traditional pluggable optics are hitting their limits at 102.4 Tbps. They consume excessive power, introduce latency, and require larger system footprints. TH6-Davisson addresses these issues by integrating the optical engines directly into the same package as the Ethernet switch, using TSMC’s (NYSE:TSM) COUPE technology. By shortening electrical paths and eliminating the need for additional DSPs and retimers, CPO significantly reduces power while enabling higher bandwidth density at the chip edge.

The result is a 70% reduction in optical interconnect power consumption compared to traditional pluggable solutions. For hyperscalers running massive AI clusters, this translates to millions of dollars in reduced energy costs.
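The "millions of dollars" figure is easy to sanity-check. A minimal sketch that converts the quoted 70% optical power reduction into yearly electricity cost; every input other than the 70% (watts of optics per switch, fleet size, electricity price, PUE) is a placeholder to replace with your own numbers, not a Broadcom figure:

```python
HOURS_PER_YEAR = 24 * 365


def annual_savings_usd(optics_watts_per_switch: float, reduction: float,
                       switches: int, usd_per_kwh: float, pue: float = 1.0) -> float:
    """Yearly electricity cost avoided by cutting optical power by `reduction`.

    `pue` scales IT power to facility power (cooling etc.); 1.0 ignores overhead.
    """
    saved_kw = optics_watts_per_switch * reduction * switches / 1_000
    return saved_kw * HOURS_PER_YEAR * usd_per_kwh * pue


if __name__ == "__main__":
    # Placeholder inputs: 1 kW of optics per switch, 10,000 switches, $0.10/kWh.
    print(f"${annual_savings_usd(1_000, 0.70, 10_000, 0.10):,.0f} per year")
```

With those placeholder inputs the result is about $6.1 million a year, before counting any cooling overhead (a `pue` above 1.0).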

Link stability is equally critical. Even minor link flaps can cause measurable drops in GPU utilization during training runs. By eliminating the manufacturing and test variability inherent in pluggable transceivers, the integrated CPO design significantly improves link stability and overall cluster reliability.

TH6-Davisson operates at 200 Gbps per channel and is fully interoperable with DR-based transceivers, linear pluggable optics (LPO), and other CPO optical interconnects.

Credit: Broadcom

The Bottom Line

Tomahawk 6 addresses the network bottlenecks constraining AI infrastructure at scale. By maintaining line-rate performance during congestion bursts and providing unified Ethernet for both scale-up and scale-out, it keeps GPU clusters productive and reduces job completion times.

While competitors Marvell (Teralynx 10) and NVIDIA (Spectrum-X) currently offer 51.2 Tbps switch silicon and are developing their own co-packaged optics platforms, Broadcom’s leap to 102.4 Tbps with the TH6-Davisson CPO positions it a generation ahead in the switch silicon market.

Alex Cronin

Alex is a seasoned business leader and AI innovator with over 18 years of experience aligning enterprise IT solutions with business outcomes. A Georgia Tech alumnus, he has led open networking transformations across data centers, service providers, and hyperscalers. As the founder of Hardware Nation and now at the helm of Nanites AI, he’s partnering with major enterprises to build the world’s first closed-loop network autopilot powered by agentic AI. A featured speaker at CableLabs and a Top 12 finalist in the 2025 T-Mobile T-Challenge, Alex is a strong advocate for open infrastructure and autonomous systems.

He has partnered with industry-leading organizations including Deutsche Telekom, Accton, Accenture, Stanford, Georgia Tech, NASA, and Lockheed Martin, and has led hundreds of projects spanning startups to Fortune 500 enterprises.