Manufacturer: Mellanox Technologies Ltd

Manufacturer Part Number: MCX556M-ECAT-S25

Product Line: ConnectX-5

Product Name: ConnectX-5 Infiniband/Ethernet Host Bus Adapter

Marketing Information: ConnectX-5 Socket Direct with Virtual Protocol Interconnect® supports two ports of 100Gb/s InfiniBand and Ethernet connectivity, very low latency, a very high message rate, and OVS and NVMe over Fabric offloads, providing the highest performance and most flexible solution for the most demanding applications and markets: Machine Learning, Data Analytics, and more.

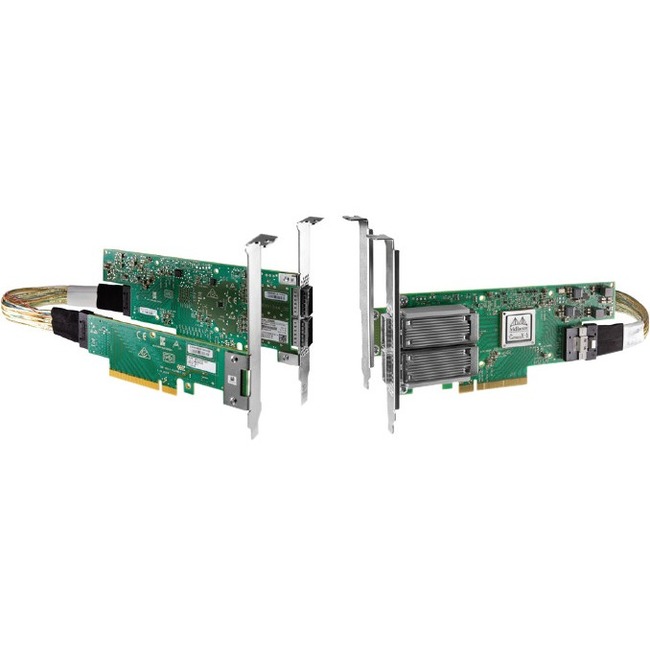

SOCKET DIRECT

ConnectX-5 Socket Direct provides 100Gb/s port speed even to servers without x16 PCIe slots by splitting the 16-lane PCIe bus into two 8-lane buses, one accessible through a PCIe x8 edge connector and the other through a parallel x8 Auxiliary PCIe Connection Card, with the two connected by a dedicated harness. The card also improves performance in dual-socket servers by giving each CPU direct access to the network through its own dedicated PCIe x8 interface. In such a configuration, Socket Direct brings lower latency and lower CPU utilization: because each CPU connects directly to the network, traffic bypasses the inter-socket link (QPI/UPI) and the other CPU, and each CPU handles only its own traffic rather than traffic from the other CPU.
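The lane split described above can be checked with a rough back-of-the-envelope calculation. The sketch below assumes the standard PCIe 3.0 figures of 8 GT/s per lane and 128b/130b encoding, and ignores packet-level protocol overhead, so real throughput is somewhat lower. The function and constant names are illustrative only and do not come from any Mellanox tool.

```python
# Rough per-direction bandwidth of a PCIe 3.0 link after line encoding.
# Assumption: packet-level overhead (TLP headers, flow control) is ignored,
# so achievable throughput is lower than the figures computed here.
PCIE3_GT_PER_LANE = 8.0       # PCIe 3.0 signalling rate, GT/s per lane
PCIE3_ENCODING = 128 / 130    # 128b/130b line encoding efficiency
PORT_SPEED_GBPS = 100.0       # one ConnectX-5 port, per the listing


def link_gbps(lanes: int) -> float:
    """Return post-encoding bandwidth in Gb/s for a PCIe 3.0 link."""
    return lanes * PCIE3_GT_PER_LANE * PCIE3_ENCODING


single_x8 = link_gbps(8)      # one x8 edge connector on its own
dual_x8 = 2 * link_gbps(8)    # edge connector plus auxiliary x8 card

print(f"one x8 link : {single_x8:.1f} Gb/s")
print(f"two x8 links: {dual_x8:.1f} Gb/s")
print(f"one x8 covers a 100Gb/s port: {single_x8 >= PORT_SPEED_GBPS}")
print(f"two x8 cover a 100Gb/s port : {dual_x8 >= PORT_SPEED_GBPS}")
```

Under these assumptions a single x8 link falls short of one 100Gb/s port, while the two x8 links together match the bandwidth of a single x16 slot.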

Socket Direct also enables GPUDirect® RDMA for all CPU/GPU pairs by ensuring that all GPUs are linked to CPUs close to the adapter card, and enables Intel® DDIO on both sockets by creating a direct connection between the sockets and the adapter card.

Mellanox Multi-Host™ technology, first introduced with ConnectX-4, is enabled in the Mellanox Socket Direct card, allowing multiple hosts to be connected to a single adapter by separating the PCIe interface into multiple independent interfaces.

HPC ENVIRONMENTS

ConnectX-5 delivers high bandwidth, low latency, and high computation efficiency for high-performance, data-intensive, and scalable compute and storage platforms. ConnectX-5 enhances HPC infrastructures by providing MPI, SHMEM/PGAS, and Rendezvous Tag Matching offloads, hardware support for out-of-order RDMA Write and Read operations, and additional Network Atomic and PCIe Atomic operations support.

ConnectX-5 VPI utilizes both IBTA RDMA (Remote Direct Memory Access) and RoCE (RDMA over Converged Ethernet) technologies, delivering low latency and high performance. ConnectX-5 enhances RDMA network capabilities by completing the Switch Adaptive-Routing capabilities and supporting data delivered out-of-order while maintaining ordered completion semantics, providing multipath reliability and efficient support for all network topologies, including DragonFly and DragonFly+.

ConnectX-5 also supports Burst Buffer offload for background checkpointing without interfering with main CPU operations, and the innovative transport service Dynamic Connected Transport (DCT) to ensure extreme scalability for compute and storage systems.

Manufacturer Website Address: http://www.mellanox.com

Brand Name: Mellanox

Product Type: Infiniband/Ethernet Host Bus Adapter

Technical Information

Total Number of InfiniBand Ports: 2

Data Transfer Rate: 100 Gbit/s

Host Interface: PCI Express 3.0 x8

I/O Expansions

Number of Total Expansion Slots: 2

Expansion Slot Type: QSFP28

Physical Characteristics

Form Factor: Plug-in Card

Card Height: Low-profile

Height: 2.7″

Width: 6.6″

Miscellaneous

Package Contents:

- ConnectX-5 Infiniband/Ethernet Host Bus Adapter

- 25cm Harness

- Short Bracket

Environmentally Friendly: Yes

Environmental Certification: RoHS-6